The technology landscape has changed a great deal even since my first Harvard Macy blog post back in 2015. As computing power increases exponentially, many technologies that were previously thought to be science fiction are coming to fruition. Artificial intelligence, machine learning, neural networks, blockchain technology, augmented reality, virtual reality, and 3D printing are now making their way into common language outside of our higher education walls. Ever-increasing attention has been given to augmented reality (AR) and virtual reality (VR), with new companies popping up every day and existing companies scrambling to expand their capabilities using these technologies. In 2017 alone, venture capitalists invested over $3 billion in AR and VR startups, and the global healthcare AR/VR market is expected to hit $15 billion by 2026. Virtual and augmented reality headsets are plummeting in price and rapidly reaching the consumer market, with the HTC Vive and Facebook’s Oculus Rift falling from $800 in 2017 to $399-499 in 2018. Mobile-based AR is rapidly gaining popularity as our everyday devices are now supported by Apple’s ARKit and Google’s ARCore. Bringing these technologies to mobile devices will have huge implications for education and learning.

This post is not intended to be exhaustive, but rather to offer a snapshot of the technological capabilities out there, with examples. Regardless of the technology, the adage ‘Content is King’ strongly resonates: technology will never make up for poor content or pedagogy. Although there is strong buzz around these technologies, I encourage everyone to be critical and ask how a technology can actually add value or capabilities to the educational content without becoming the educational content itself. The best way to evaluate this is to ask yourself, ‘Could this content be made meaningful without this technology?’ For example, a virtual patient in VR may be cool, but are the interactions with that patient any different from those that could be had with much lower-tech tools like a laptop or mobile device? In some instances, technology can actually add unnecessary cognitive load and detract from the learning experience.

This post will detail technological advancements in the consumer and educational realms, and how medical educators are starting to use these technologies to augment and, in some instances, replace existing learning experiences.

3D Visualization

3D visualization goes by many names, including 3D rendering, computer-generated graphics, and 3D graphics. Anyone who has watched an action or superhero movie in the last 10 years knows what this looks like. Essentially, artists use computers to generate images representing three-dimensional objects. For the most part, these 3D visualizations are intended to be viewed on a two-dimensional screen. 3D graphical art has expanded rapidly and has become almost ubiquitous. Anatomy has been the most heavily represented subject in this space, leading many educators to ask whether we still need human cadavers for anatomic dissection. Many companies have ventured into the interactive human anatomy atlas space, including BioDigital’s 3D Interactive Human Cadaver, Medis Media’s 3D Anatomy Organon®, GraphicViZion’s Visual Anatomy 3D Human, and Visible Body® Human Anatomy Atlas. Almost all of these are available as mobile apps, and some are also available in virtual reality. They are all excellent for visualizing human anatomy in fine detail, with the ability to peel away layers of skin and muscle to see the human body in all its glory. However, interactivity with these models is largely limited to rotating and zooming. Some platforms add links with pop-ups that describe the form or function of a given anatomical part.

3D models can also be derived from imaging studies such as CT and MRI scans. Various types of software can take the raw imaging data and generate a 3D image with minimal user input. This allows rapid production of 3D anatomical models specific to individual patients, not just artistic renderings. EchoPixel’s True 3D Viewer and Body VR Anatomy Viewer can take CT scan data and create a 3D image that can be viewed on screen or in virtual reality. Anatomage offers a suite of large touchscreen tabletop virtual dissectors based on CT or MRI data. Some of these technologies are used for surgical planning but can readily be used in the classroom or on the wards.

Virtual Reality and Augmented Reality

Virtual and augmented reality have been rapidly infiltrating the consumer space, especially in marketing and video games. They have also become popular in architecture, advertising, and sales. For those not familiar with these technologies, some explanations are in order. Both virtual reality and augmented reality involve viewing digital information in space. This can be pictures, words, videos, or any other type of media. The big difference is whether or not the user can see the real world at the same time as the digital, or “virtual,” world.

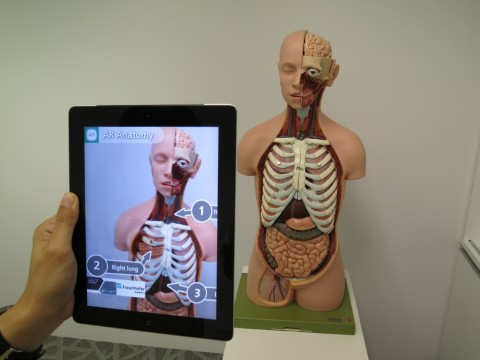

Augmented reality usually refers to the layering of digital images over a physical space.

Anyone who has seen Pokémon™ Go or Snapchat™ filters has seen augmented reality (AR). The key is that you can still see the physical world while you view the digital overlay. You can often interact with the displayed digital images by clicking, zooming, and rotating. To view AR images, you can use everyday personal devices such as tablets or smartphones, or specialized viewers like the Microsoft™ HoloLens.

In medical education, we are just starting to see some of these capabilities realized. Many have seen the video of the collaboration between Microsoft HoloLens™, the Cleveland Clinic, and HMI faculty member Dr. Neil Mehta. It is a great example of a digital human displayed in free space, which learners can interact with and learn from without the expense of a human cadaver. Procedural training modules have been developed in AR as well, for procedures such as central line placement. With most smartphones now AR-capable, the horizon for AR in medical education is bright. As interactivity improves beyond simple image rotation, zoom, and labels, we will see stronger pressure on traditional textbooks and anatomy labs.

Virtual reality (VR) differs slightly from AR in that it is what is called “fully immersive.” This means that the digital world completely replaces the physical world, and the user has no sense of the “real” physical world around them. Viewing VR content often requires specialized VR viewers. The list is ever expanding but includes the HTC Vive™, Oculus Rift™ and Oculus Go™ from Facebook™, and Sony PlayStation™ VR. There are also smartphone-based VR kits, including the Samsung Gear VR™ and the low-cost Google™ Cardboard. The viewers have sensors on the headset, and sometimes in the room, to sense where in “space” the user is standing, moving, or looking. Often, these systems include controllers that allow the user to interact with the virtual world.

The biggest issue currently with VR is that roughly 15-20% of individuals become nauseated and dizzy from its use. This is often because the frame rate (how quickly the display can update images as your head moves) is mismatched with your body’s own perception of motion. The conflicting information creates a vertigo-type feeling. This is especially common with some of the lower-powered and less expensive VR viewers. The more powerful VR viewers, on the other hand, need to be physically plugged into a computer to run, which creates a physical limitation not present in many other systems.

Despite some of these limitations, VR holds great promise in medical education when used well. One cool application is The Body VR: Journey Inside a Cell, in which you are virtually shrunk down to the cellular level and become part of the bloodstream to view all its complex processes. Instead of reading about these abstract processes on a page, trainees can see what they might actually look like in VR.

In medical education, institutions are investing in AR and VR studios to leverage this technology and push educational innovation forward. The Augmentarium at the University of Maryland supports research in AR and VR and has gained a new focus on educational technology research. The true capabilities of AR and VR have yet to be fully realized. Because AR and VR can support multiple users, we can imagine interacting in real time as part of an interdisciplinary experience with students in another classroom or even on another continent. Users can be represented in the virtual world and interact in realistic scenarios such as running a code, managing an OR emergency, or responding to a mass casualty situation.

The possibilities abound with AR and VR in healthcare education. With the supporting hardware advancing rapidly, we stand to see faster and better systems at lower and lower costs. Although these technologies are often still cost-prohibitive, increasing competition is already driving down development and hardware costs, making them more accessible to casual users. I am excited about the future of educational technology in healthcare education and all the new and cool applications to come.

* * * * * * * * * * * * * * * * *

Use technology to advance your teaching. Come to our Health Care Education 2.0 course in October with faculty Neil Mehta, Roy Phitayakorn, and Tom Aretz! We cover educational technology, instructional design, online learning, curriculum development, and more! Only a few seats remain.

Eric Gantwerker

Eric Gantwerker MD, MS, MMSc (MedEd) (Educators ’15, Leaders ’15) is a graduate of the MMSc in Medical Education Program at Harvard Medical School. His expertise is in educational technology, faculty development, motivational theory, and the cognitive psychology of learning. He currently works clinically in New York as a pediatric otolaryngologist and also serves as Vice President, Medical Director of Level Ex, a technology company.

You can follow Dr. Gantwerker on Twitter @DrEricGant.